I’ve avoided writing about it, because good data on its energy consumption and climate implications is hard to get (see our podcast episode with Sasha Luccioni), and without decent data, I’m just hand-waving.

But a few things made me think I should finally say something. I doubt it will be the last article I write on the topic because things are evolving quickly.

First, the International Energy Agency (IEA) recently published its landmark World Energy Outlook 2024 report. It suggests that energy demand for data centres and AI will still be pretty small for the next five years at least. I read it as them saying: “Everyone just needs to chill out a bit.” But in a more diplomatic way.1

Second, we’ve been here before with fears around uncontrollable growth in energy demand for data centres. It’s worth looking at why the doom scenario didn’t come true and what similarities (or differences) we’re facing today.

Third, the public and media conversation on this has been poor. I’ve lost track of the number of headlines and articles I’ve seen that quote random numbers, without context, to show how much energy or water AI already uses. The numbers are often fairly small but sound big because they’re not set against how much energy or water we use for everything else.

Here I’ll look at recent estimates of how much electricity data centres use today, what some medium-term projections look like, and how to square this with recent announcements from tech companies.

A few percent of the world’s electricity, at most.

It’s not as easy to calculate this as you might think. Companies with data centres don’t simply report the electricity use of their servers for us to tally up into a total. Instead, researchers try to estimate the energy use of these processes using both bottom-up and top-down approaches. While they come up with slightly different estimates, they tend to cluster around the same outcome.2

Data centres use around 1 to 2% of the world’s electricity. When cryptocurrency is included, it’s around 2%.

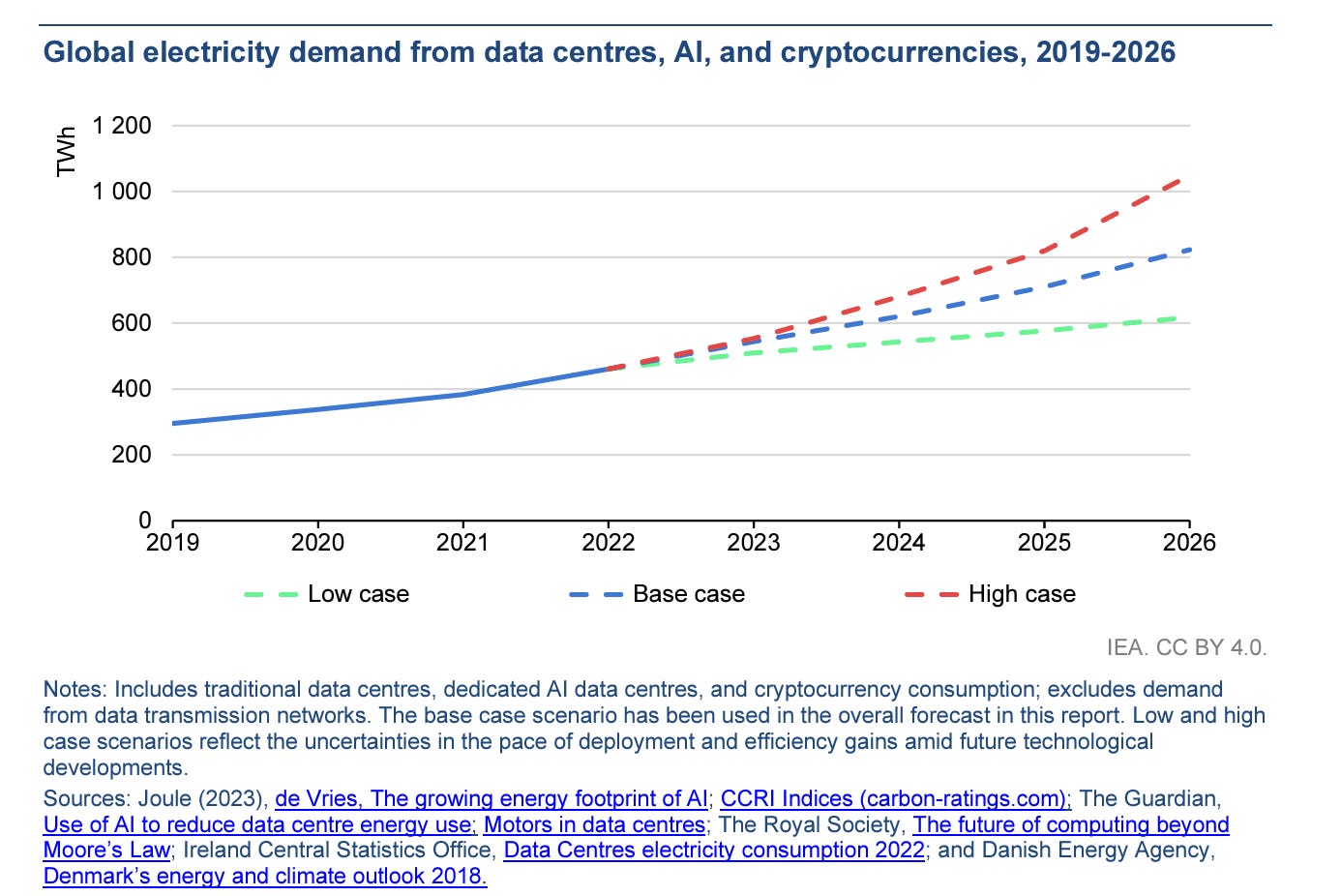

In its annual electricity report, published in January this year, the IEA estimated that data centres, AI and cryptocurrencies consumed around 460 terawatt-hours (TWh) of electricity. That’s just under 2% of global electricity demand.

You’ll notice that this figure is for 2022, and we’ve had a major AI boom since then. We would expect that energy demand in 2023 and 2024 would be higher than this. But not significantly more. As we’ll see in the next section, the IEA’s projected increase in demand to 2030 is not huge. So it must expect that the increase in 2023 and 2024 is even smaller.

Researcher Alex de Vries was one of the first to try to quantify the energy footprint of AI in the last few years.3 One way to tackle this is to estimate how much energy could be consumed by the servers NVIDIA sells. NVIDIA completely dominates the AI server market, accounting for around 95% of global sales.

De Vries estimated how much energy would be used if all of the servers delivered in 2023 were running at full capacity. It came to around 5 to 10 TWh; a tiny fraction of the 460 TWh that is used for all data centres, transmission networks and cryptocurrency.
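To put "a tiny fraction" into numbers, here is a minimal sketch of the arithmetic using only the figures quoted above. The variable and function names are my own, chosen for illustration.

```python
# Figures quoted in the text, in TWh per year.
AI_SERVERS_LOW_TWH = 5.0
AI_SERVERS_HIGH_TWH = 10.0
DATA_CENTRES_TOTAL_TWH = 460.0  # data centres, networks and crypto combined


def share_percent(part_twh: float, total_twh: float) -> float:
    """Return `part_twh` as a percentage of `total_twh`."""
    return 100.0 * part_twh / total_twh


low_share = share_percent(AI_SERVERS_LOW_TWH, DATA_CENTRES_TOTAL_TWH)
high_share = share_percent(AI_SERVERS_HIGH_TWH, DATA_CENTRES_TOTAL_TWH)

print(f"AI servers: {low_share:.1f}% to {high_share:.1f}% of the 460 TWh total")
```

On these numbers, the AI servers delivered in 2023 would account for roughly 1% to 2% of the 460 TWh, even if they all ran at full capacity.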

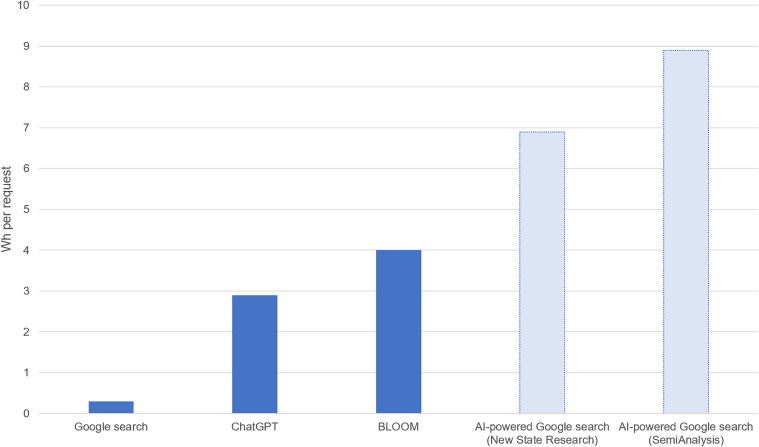

Another way to estimate the bounds of energy use is to look at how much energy would be needed if search engines like Google were to switch to LLM-powered results. It’s thought that an LLM search uses around ten times as much energy as a standard Google Search (see the chart below).4

De Vries estimated that if every Google search became an LLM search, the company’s annual electricity demand would increase from 18 to 29 TWh. Not insignificant, but not huge compared to the total energy demand of data centres globally. One key thing to note is that this speed of transition for Google seems very unlikely, not least because NVIDIA would probably not be able to produce servers quickly enough. The production capacity of servers is a real constraint on AI growth.
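The Google scenario can be sized the same way. This is a rough sketch that uses only the 18 TWh, 29 TWh and 460 TWh figures from the text; the names are illustrative.

```python
# Figures quoted in the text, in TWh per year.
GOOGLE_CURRENT_TWH = 18.0
GOOGLE_ALL_LLM_TWH = 29.0
DATA_CENTRES_TOTAL_TWH = 460.0

# Extra electricity if every search became an LLM search.
extra_twh = GOOGLE_ALL_LLM_TWH - GOOGLE_CURRENT_TWH

# How large that jump is for Google itself, and against the global total.
increase_for_google = 100.0 * extra_twh / GOOGLE_CURRENT_TWH
extra_vs_global = 100.0 * extra_twh / DATA_CENTRES_TOTAL_TWH

print(f"Extra demand: {extra_twh:.0f} TWh")
print(f"Increase for Google: {increase_for_google:.0f}%")
print(f"Share of the 460 TWh total: {extra_vs_global:.1f}%")
```

So the switch would be a large jump for one company (around 60%), but the extra 11 TWh is only a couple of percent of what data centres, networks and crypto already use.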

While data centres and AI consume only a few percent of global electricity, in some countries this share is much higher. Ireland is a perfect example: data centres make up around 17% of its electricity demand. In the US and some countries in Europe, it’s higher than the global average, and closer to 3% to 4%. As we’ll see later, energy demand for AI is very localised; in more than five states in the US, data centres account for more than 10% of electricity demand.

While people often gawk at the energy demand of data centres, I think they’re an extremely good deal. The world runs on digital now. Stop our internet services, and everything around us would crumble. A few percent of the world’s electricity to keep that running seems more than fine to me.

How much energy could AI use in the future?

Of course, we don’t know. And I’d be very sceptical of any projections more than a decade into the future. Even five years is starting to get highly speculative.

Last month, the International Energy Agency (IEA) published its latest World Energy Outlook report. It’s packed with interesting insights, but it was its projections for data centres that caught many people’s attention. They were, well, a bit underwhelming.5

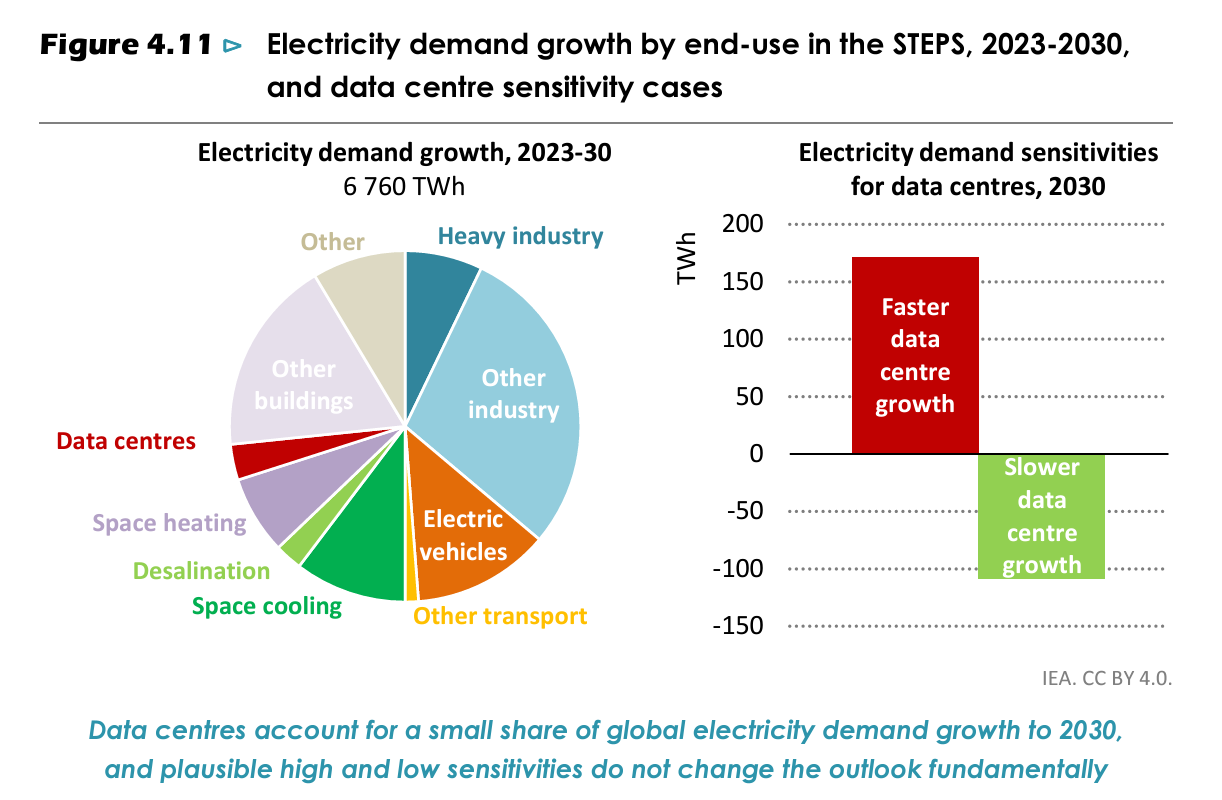

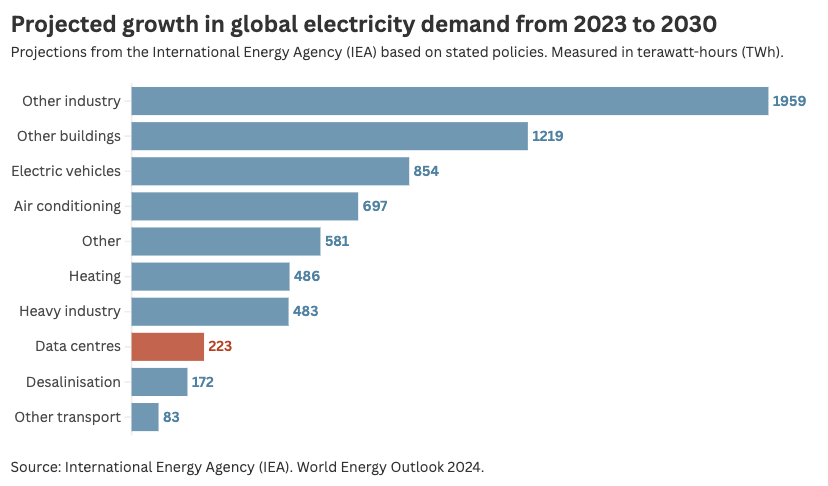

The IEA published projections for how much it expected electricity demand to grow between 2023 and 2030. You can see the drivers of this increase in the chart below.6

Data centres made up just 223 TWh of the more than 6,000 TWh total. That is just 3% of the demand growth.
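As a quick check on the order of magnitude, the sketch below divides the 223 TWh by exactly 6,000 TWh. Since the text only says the total is "more than" 6,000 TWh, this gives an upper bound on the share rather than the IEA's own figure; the names are illustrative.

```python
# Figures quoted in the text, in TWh of demand growth from 2023 to 2030.
DATA_CENTRE_GROWTH_TWH = 223.0
TOTAL_GROWTH_LOWER_BOUND_TWH = 6000.0  # the text says "more than" this

# Dividing by the lower bound of the total gives the largest possible share.
max_share = 100.0 * DATA_CENTRE_GROWTH_TWH / TOTAL_GROWTH_LOWER_BOUND_TWH

print(f"Data centres: at most {max_share:.1f}% of projected demand growth")
```

The upper bound is a little under 4%, in the same range as the roughly 3% quoted; the exact share depends on how far above 6,000 TWh the total is.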

Other things, such as industry, electric vehicles, and increased demand for air conditioning (and incidentally, more demand for heating) were much more important.

The growth from data centres was not much more than the growth from desalination, which I wrote about previously.

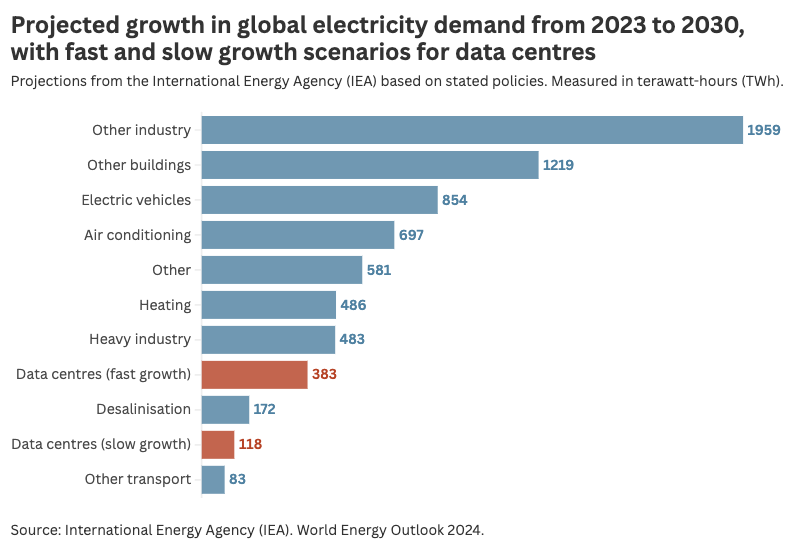

These projections are very uncertain. The IEA tried to put bounds on the sensitivity of these estimates by publishing “fast AI growth” and “slow AI growth” scenarios. In the chart below I’ve shown where these would rank. Even in the fast growth scenario, data centres don’t move up the list of the big drivers of electricity demand.

It’s worth noting that this is lower than the IEA’s earlier projections. And by earlier, I mean in January this year. In its 2024 electricity report, it thought that energy demand from data centres could double by 2026 (see below). The increase by 2030 would be even higher.

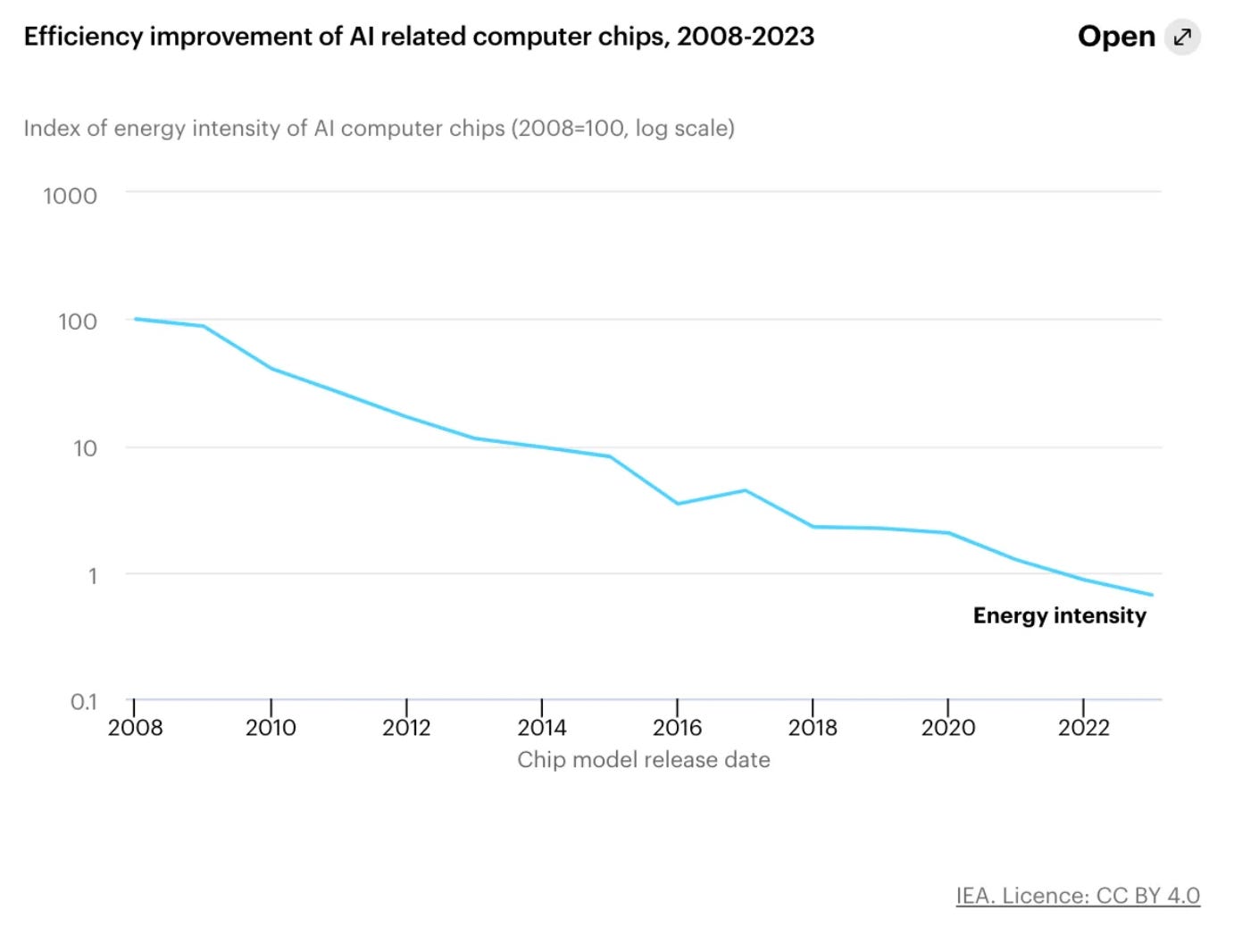

You might wonder how these estimates can be so low when the demand for AI itself is booming. Well, the efficiency of data centres has also been improving rapidly.

In the chart below you can see the efficiency improvement in computer chips. It’s on a log scale. The energy intensity of these chips is less than 1% of what it was in 2008.

We’ve been here before with fears around data centres

People have been predicting that the energy demand for computers and the internet will skyrocket for a long time.

From a 1999 article in Forbes: “Dig more coal — the PCs are coming”:

“The global implications are enormous. Intel projects a billion people on-line worldwide. [...] One billion PCs on the Web represent an electric demand equal to the total capacity of the U.S. today.”

Of course, demand for digital services and data centres did skyrocket. They were right about that. If you were to take the expected growth in internet technologies since 2010 and assume that energy demand would follow, then you do get pretty scary numbers.

But energy demand did not follow in the same way. That’s because it was curbed by the huge efficiency gains we just looked at.

Between 2010 and 2018, global data centre compute increased by more than 550%. Yet energy use in data centres increased by just 6%.7
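Those two percentages imply a very large drop in energy used per unit of compute. Here is a minimal sketch of that calculation. It treats "more than 550%" as exactly 550%, so the result is a conservative estimate, and the annualised figure assumes a steady rate of improvement over the eight years, which is my simplification rather than something the source states.

```python
# Growth between 2010 and 2018, as quoted in the text.
COMPUTE_INCREASE_PERCENT = 550.0  # "more than 550%", so a lower bound
ENERGY_INCREASE_PERCENT = 6.0
YEARS = 2018 - 2010

# Convert percentage increases into multiplicative growth factors.
compute_factor = 1.0 + COMPUTE_INCREASE_PERCENT / 100.0  # 6.5x
energy_factor = 1.0 + ENERGY_INCREASE_PERCENT / 100.0    # 1.06x

# Energy per unit of compute in 2018, relative to 2010.
intensity_ratio = energy_factor / compute_factor
intensity_drop = 100.0 * (1.0 - intensity_ratio)

# Assumption: the improvement was spread evenly across the period.
annual_factor = intensity_ratio ** (1.0 / YEARS)
annual_drop = 100.0 * (1.0 - annual_factor)

print(f"Energy per unit of compute fell by about {intensity_drop:.0f}%")
print(f"Equivalent steady decline: about {annual_drop:.0f}% per year")
```

In other words, each unit of compute in 2018 needed less than a fifth of the energy it did in 2010, which is why total energy use barely moved.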

This follows on from “Koomey’s Law” — named after the researcher Jonathan Koomey — which describes the dramatic increase in computations you could carry out per unit of energy.

But tech companies are investing a lot in energy — doesn’t this mean demand is going to skyrocket?

Something’s not adding up here, though. The IEA and other researchers suggest that the energy demand growth for AI will be notable but not overwhelming. But in the background, tech companies are scrambling to invest in reliable, low-carbon energy. Microsoft has made a deal to reopen Three Mile Island.

An entire nuclear power plant to power one company’s operations? That seems big. At least until you run the numbers.

In its prime, Three Mile Island was producing around 7.2 TWh each year.8 The US produces 4,250 TWh of electricity each year. That means Three Mile Island is just 0.2% of the total. Or 0.02% of the global total.9
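Running those numbers explicitly: the sketch below uses the 7.2 TWh and 4,250 TWh figures from the text. The global total is not given in this section, so `ASSUMED_GLOBAL_TWH` is a round figure I have assumed for illustration, not a number from the source.

```python
# Figures quoted in the text, in TWh per year.
THREE_MILE_ISLAND_TWH = 7.2
US_GENERATION_TWH = 4250.0

# Assumption (not from the text): a round figure for global generation.
ASSUMED_GLOBAL_TWH = 29_000.0

us_share = 100.0 * THREE_MILE_ISLAND_TWH / US_GENERATION_TWH
global_share = 100.0 * THREE_MILE_ISLAND_TWH / ASSUMED_GLOBAL_TWH

print(f"Share of US electricity: {us_share:.1f}%")
print(f"Share of global electricity: {global_share:.2f}%")
```

With that assumed global total, the results round to the 0.2% and 0.02% quoted above.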

So, both things can be right at the same time: Microsoft’s data centres could be powered entirely by Three Mile Island and this would just be a rounding error on US or global electricity demand.

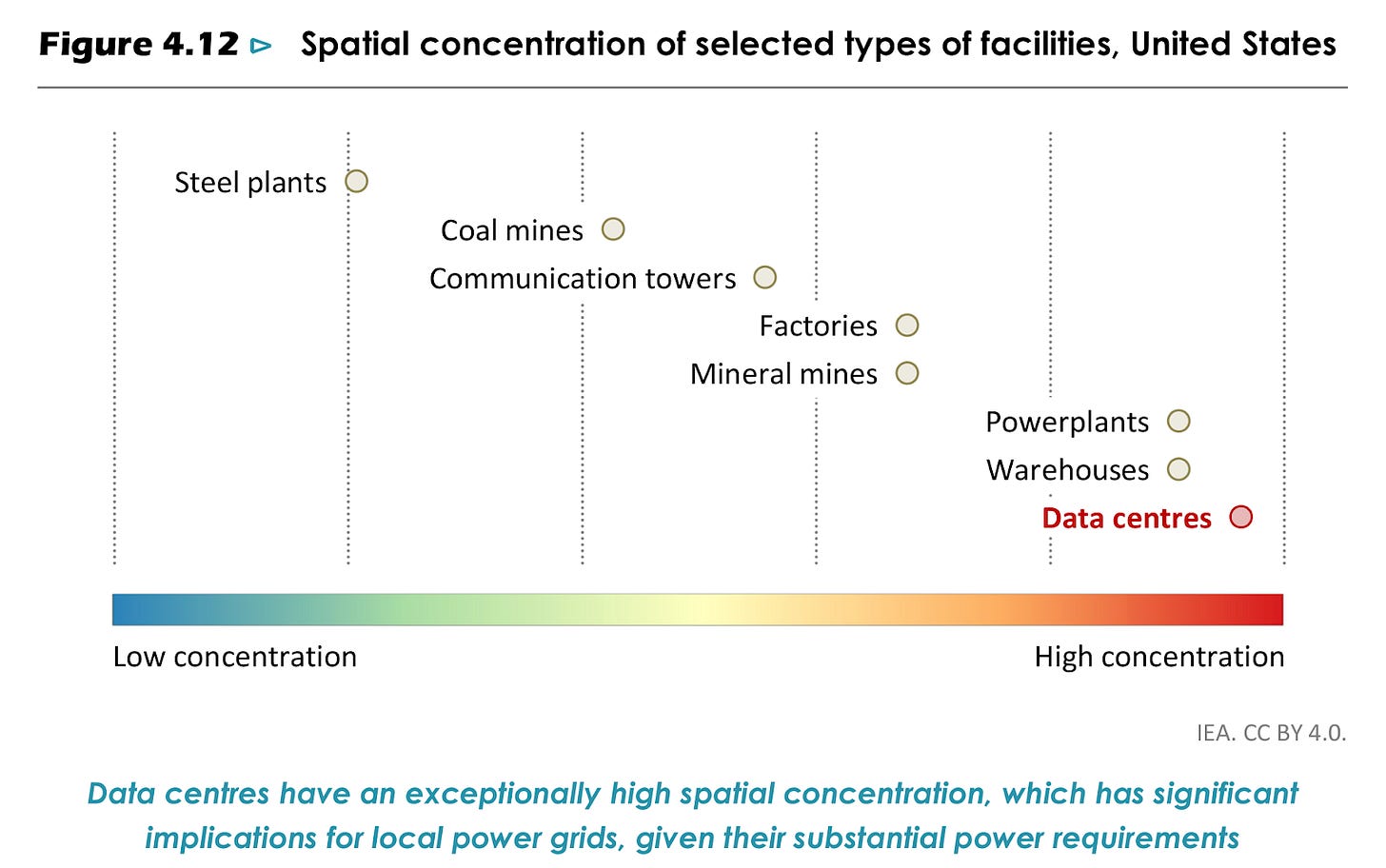

What’s crucial here is that the energy demands for AI are very localised. This means there can be severe strain on the grid at a highly localised level, even if the impact on total energy demand is small.

As the IEA shows in its recent report, the spatial concentration of energy demand is extremely high for data centres — far higher than other types of facilities — which is one of the reasons why you see a mismatch in the concerns of tech companies at the local level, and the numbers on a global scale.

Another key mismatch that companies are grappling with is speed. Demand for AI is growing quickly, and data centres can be built relatively quickly to serve that. Building energy infrastructure is much slower (especially if you’re trying to build a large-scale nuclear plant in the US). That means energy supply really can be a bottleneck, even if the total requirements at a national or global level are not huge.

Trends in AI and energy demand are still very uncertain

The future energy demand for AI is still very uncertain. There’s nothing to say that expectations from organisations like the IEA will be right. They’ve been very wrong about other technology trends in the past; notably underestimating the growth of solar PV and electric vehicles.

I don’t want to paint the picture that AI energy demand is a “nothingburger” because we don’t know how these trends are going to evolve.

Having more transparency from tech companies on current energy demands — and how they’re changing over time — would allow researchers to keep up. I’m far from the first to say that.

Continued improvement in the efficiency of hardware will also be crucial.

Efficiency improvements on their own won’t be able to keep up with the growth in demand for AI. I haven’t seen any estimates that expect energy demand to stay where it is today. Energy demand will grow. But possibly less than many assume.

Here’s the main figure on this from the IEA’s report. Note the caption: “Data centres account for a small share of global electricity demand growth to 2030, and plausible high and low sensitivities do not change the outlook fundamentally.”